nVidia 331.13 - BETA Linux 64 bit released Oct 4 adds GPU usage

21 Oct 2013 19:58:01 UTC

Topic 197229

Normally I avoid betas, avoid talking about betas, avoid thinking about betas...

but http://www.geforce.co.uk/drivers/results/68392

* Added GPU utilization reporting to the nvidia-settings control panel.

So I tried an install of 331.13, which did not go too well.

I'll just have to wait....

Hmm...may I ask what were the problems you experienced?

I installed this on a Scientific Linux 6.2 machine with a rather outdated kernel and it still seems to work, AND it avoids the performance problems I had seen on that machine with some of the newer drivers, described by others here: http://einsteinathome.org/node/197093&nowrap=true#126126

The nvidia-settings tool now has all the GPU-Z-like measurements like GPU load, Video engine load and PCI utilization that we have missed for so long on Linux. This could be a real improvement!

Cheers

HBE

RE: Hmm...may I ask what

After restarting, gdm starts in a recovery mode, and the nvidia console reports no GPUs installed. I didn't really have a lot of time tonight to spend diagnosing the problem - so a hasty retreat to 325.15!

That is good news indeed. Been a while since a driver release had added something useful.

And 331.17 is already out.

3.11 and 3.12 kernels are still not supported (without the patch).

I'd rather wait for the final version.

RE: And 331.17 is already

I couldn't wait, so I did a more orderly shutdown and install of 331.17, which seems to be - dare I say - betta.

I have read the instructions on the nvidia site for installing their drivers: the need to disable X and disable nouveau, and that if DKMS is installed you can avoid the need to reinstall the drivers when the kernel changes, because DKMS takes care of this.
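As a rough sketch of the pre-install checks described here, the helper below tests whether nouveau is still loaded. The name `nouveau_loaded` is made up for illustration, and the display-manager command, installer file name and `--dkms` option in the comments are assumptions that should be checked against the NVIDIA README for your driver version and distro.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if lsmod-style text on stdin lists the
# nouveau module (the first column of lsmod output is the module name).
nouveau_loaded() {
    awk '$1 == "nouveau" { found = 1 } END { exit !found }'
}

# Usage sketch (run as root from a text console; all commands are assumptions):
#   lsmod | nouveau_loaded && echo "nouveau is still loaded; blacklist it and reboot"
#   service lightdm stop                        # or gdm/kdm, so that X is not running
#   sh NVIDIA-Linux-x86_64-331.17.run --dkms    # ask the installer to register with DKMS
```

Reading the module list from stdin keeps the check easy to try on saved `lsmod` output before touching a live system.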

Since some are jumping on this beta, can you provide your steps/procedures for installing drivers from the nvidia site? I installed the drivers from the nvidia site when running Fedora but had mixed results. I must not have had DKMS installed, because after every kernel upgrade I was forced to reinstall the drivers. Sometimes this was successful, sometimes not. I eventually used a PPA to install drivers, which eliminated the need to reinstall them after a kernel upgrade.

I am currently waiting for a box to complete all WUs before I attempt a 331 install on Ubuntu 12.04, kernel 3.2.0-54-generic, with a GTX 650 Ti. If you think another thread on "installing drivers" would be useful, then maybe we can provide installation procedures for the most commonly used distros. I believe this could benefit this community.

Any thoughts/suggestions?

RE: Hmm...may I ask what

Does the output of "nvidia-smi -a" provide more info than under the previous drivers? Here is the output from an earlier driver:

==============NVSMI LOG==============

Timestamp : Fri Oct 25 14:18:28 2013
Driver Version : 304.88

Attached GPUs : 1
GPU 0000:01:00.0
    Product Name : GeForce GTX 650 Ti
    Display Mode : N/A
    Persistence Mode : Disabled
    Driver Model
        Current : N/A
        Pending : N/A
    Serial Number : N/A
    GPU UUID : GPU-709d5617-547e-ee59-b812-4dffa8f5106a
    VBIOS Version : 80.06.21.00.1C
    Inforom Version
        Image Version : N/A
        OEM Object : N/A
        ECC Object : N/A
        Power Management Object : N/A
    GPU Operation Mode
        Current : N/A
        Pending : N/A
    PCI
        Bus : 0x01
        Device : 0x00
        Domain : 0x0000
        Device Id : 0x11C610DE
        Bus Id : 0000:01:00.0
        Sub System Id : 0x842A1043
        GPU Link Info
            PCIe Generation
                Max : N/A
                Current : N/A
            Link Width
                Max : N/A
                Current : N/A
    Fan Speed : 46 %
    Performance State : N/A
    Clocks Throttle Reasons : N/A
    Memory Usage
        Total : 1023 MB
        Used : 671 MB
        Free : 352 MB
    Compute Mode : Default
    Utilization
        Gpu : N/A
        Memory : N/A
    Ecc Mode
        Current : N/A
        Pending : N/A
    ECC Errors
        Volatile
            Single Bit
                Device Memory : N/A
                Register File : N/A
                L1 Cache : N/A
                L2 Cache : N/A
                Texture Memory : N/A
                Total : N/A
            Double Bit
                Device Memory : N/A
                Register File : N/A
                L1 Cache : N/A
                L2 Cache : N/A
                Texture Memory : N/A
                Total : N/A
        Aggregate
            Single Bit
                Device Memory : N/A
                Register File : N/A
                L1 Cache : N/A
                L2 Cache : N/A
                Texture Memory : N/A
                Total : N/A
            Double Bit
                Device Memory : N/A
                Register File : N/A
                L1 Cache : N/A
                L2 Cache : N/A
                Texture Memory : N/A
                Total : N/A
    Temperature
        Gpu : 58 C
    Power Readings
        Power Management : N/A
        Power Draw : N/A
        Power Limit : N/A
        Default Power Limit : N/A
        Min Power Limit : N/A
        Max Power Limit : N/A
    Clocks
        Graphics : N/A
        SM : N/A
        Memory : N/A
    Applications Clocks
        Graphics : N/A
        Memory : N/A
    Max Clocks
        Graphics : N/A
        SM : N/A
        Memory : N/A
    Compute Processes : N/A
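Most fields in the log above are N/A, so one quick way to see what a given driver actually reports is to filter those lines out. A minimal sketch follows; the function name `smi_known_fields` is made up for illustration, and section headers whose children are all N/A will still be printed.

```shell
#!/bin/sh
# Hypothetical filter: drop every "key : N/A" line and every blank line
# from nvidia-smi style text on stdin, keeping only populated fields.
smi_known_fields() {
    grep -v -e ':[[:space:]]*N/A[[:space:]]*$' -e '^[[:space:]]*$'
}

# Usage sketch:
#   nvidia-smi -a | smi_known_fields
```

Saving the filtered output under each driver version and comparing the two files with `diff` would show directly whether a newer driver fills in more fields.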

RE: does the output of

No, I also thought it should, but the NVIDIA X Server Settings tool (/usr/bin/nvidia-settings) does show these new values:

GPU utilization: typically 99% when running 2x BRP4G tasks

Video Engine Utilization: typically 0%

PCIe bandwidth: typically 18%
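These values may also be readable from the command line. The sketch below assumes that nvidia-settings exposes them as a `GPUUtilization` attribute and that a terse query (`-t -q`) prints a single line of comma-separated `key=value` pairs; both are assumptions to verify on your driver. The function name `gpu_util_field` and the `memory=35` figure in the sample line are made up for illustration.

```shell
#!/bin/sh
# Hypothetical parser: read one line such as
#   graphics=99, memory=35, video=0, PCIe=18
# from stdin and print the value that belongs to the key given as $1.
gpu_util_field() {
    tr -d ' ' | tr ',' '\n' | awk -F= -v key="$1" '$1 == key { print $2 }'
}

# Usage sketch (attribute name and output layout are assumptions):
#   nvidia-settings -t -q '[gpu:0]/GPUUtilization' | gpu_util_field graphics
```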

hth

RE: RE: does the output

)

I have just completed the installation of the 331.13 drivers on Ubuntu 12.04 with kernel "3.5.0-18-generic" and a NVIDIA 650Ti. My installation procedure added in the "xorg-edgers" into my systems software sources and updating from this ppa. Then using the ubuntu software manager (graphical) i could uninstall the old drivers and install the "331 drivers" and "331 settings"

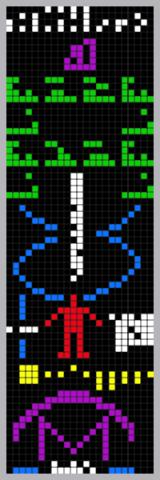

My experience with the graphical data(nvidia-settings) and the output from "nvidia-smi" agree with your findings. Not a whole lot of bang for the buck. But here is an image of the GPU data

As I write this I am continuing to down WUs from E&H. Now how does one determine their performance with this driver against a previous driver? Are you looking at valid tasks and comparing runtime/cpu time values of older jobs against current jobs crunching under the new 331 driver? Or is this some better way?

RE: ... Now how does one


Richard already answered a similar question you posted in the other thread and I agree with the advice he gave you. You have to compare crunch times before and after and you can't get an accurate picture from RAC alone.

Having said that, I should add that I find RAC particularly useful for spotting when something is wrong. I have a lot of hosts and I don't even try to monitor them all individually but I do regularly watch for trends in RAC. I have more than 20 hosts with a number of different GPUs, the most common being the GTX650. Because of different generations of motherboards and CPUs, there is some variation in the RACs of all hosts with GTX650s but if you take the subset of those which have Sandy Bridge or later generation mobos and CPUs, the RACs are surprisingly similar with most of the variation being attributable to the additional contribution of a quad core CPU as compared to a dual core.

As an example of how RAC is useful, here is something I've just noticed and fixed in the last few days. Most of the subset I've just mentioned have stable RACs in the 32K to 35K range. In this group there were 3 hosts with identical motherboards, identical CPUs (Celeron G540, 2.5GHz dual cores) with identical OS and driver versions - they were all built at the same time. All had RACs around 32-33K. A while ago, one had a hard disk failure so I replaced the disk and reinstalled the OS and thought nothing more about it. About a week ago, when the RAC should have really become stable, I noticed it was 24K whilst the other two were still around 32-33K. On closer inspection of task run and CPU times, I saw values averaging 24.5ksecs/1.95ksecs for the run time/cpu time pair as compared to 19.1ksecs/1.98ksecs for the other two hosts that had the full RAC.
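To put the run times quoted above into proportion, here is a small arithmetic sketch. The function names are made up for illustration, and comparing the result with RAC is only approximate, since RAC also includes the CPU tasks and takes time to settle.

```shell
#!/bin/sh
# Percentage by which the slow host's run time exceeds the fast host's.
pct_slower() {
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.1f\n", (slow / fast - 1) * 100 }'
}

# Fraction of the fast host's GPU task throughput that the slow host achieves.
throughput_ratio() {
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", fast / slow }'
}

pct_slower 24.5 19.1        # prints 28.3
throughput_ratio 24.5 19.1  # prints 0.78
```

A throughput ratio of about 0.78 applied to a RAC of 32-33K gives roughly 25K, which is in the same region as the 24K observed on the slow host.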

I was very puzzled as to why this third host with the fresh OS install had such a dramatic loss of performance. Same hardware, same version of BOINC, same drivers, same everything - or so I thought. But then the penny dropped. It was the same Linux distro but it wasn't the same kernel. My distro uses a rolling release paradigm and I do keep a local mirror of the repo and a live USB containing the latest ISO image. I had updated my live USB recently and when I used it to reinstall on the replacement drive I remembered selecting a later version of the kernel compared to what I'd been using previously.

So, on the problem machine, I decided to revert to the older kernel, and lo and behold, with nothing else changed, the run times immediately dropped down to around 19ksecs in agreement with the other two. If I hadn't been casually browsing through RAC values, I probably wouldn't have noticed how that machine was underperforming. I had no idea that kernel version could make such a difference.

Cheers,

Gary.

RE: Now how does one

Yes, after any change I look at the elapsed time of all the tasks over a couple of days, and leave the system as stable as possible.

As Gary mentioned above, RAC is good for spotting a trend; it also reflects invalids and errors, which sometimes increase or decrease after a change of driver.

To look in detail at a host after a change, I look at the job_log_einstein.phys.uwm.edu.txt file in the BOINC directory, and then do something like

grep p2030 job_log_einstein* | cut -d' ' -f11 | tail -25

which shows me the elapsed times of the last 25 BRP4G tasks.

I don't generally pay much attention to the CPU time, which is one of the columns reported.

If I want to see how performance has changed over several months, I can load parts of this file into a spreadsheet etc.
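For a single figure to compare before and after a driver change, the same pipeline can be extended to average the elapsed times. This is a minimal sketch that assumes the layout implied by the command above (space-separated fields, elapsed time in field 11); the function name `mean_elapsed` is made up for illustration.

```shell
#!/bin/sh
# Mean elapsed time in seconds of the last N tasks whose log line matches
# a pattern. Assumes the elapsed time is the 11th space-separated field.
#   $1 = job log file, $2 = pattern (e.g. p2030), $3 = number of tasks
mean_elapsed() {
    grep -- "$2" "$1" | tail -n "$3" |
        awk '{ sum += $11; n++ } END { if (n) printf "%.1f\n", sum / n }'
}

# Usage sketch:
#   mean_elapsed job_log_einstein.phys.uwm.edu.txt p2030 25
```

Running it once just before the change and again a couple of days afterwards gives two averages over comparable batches of tasks.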